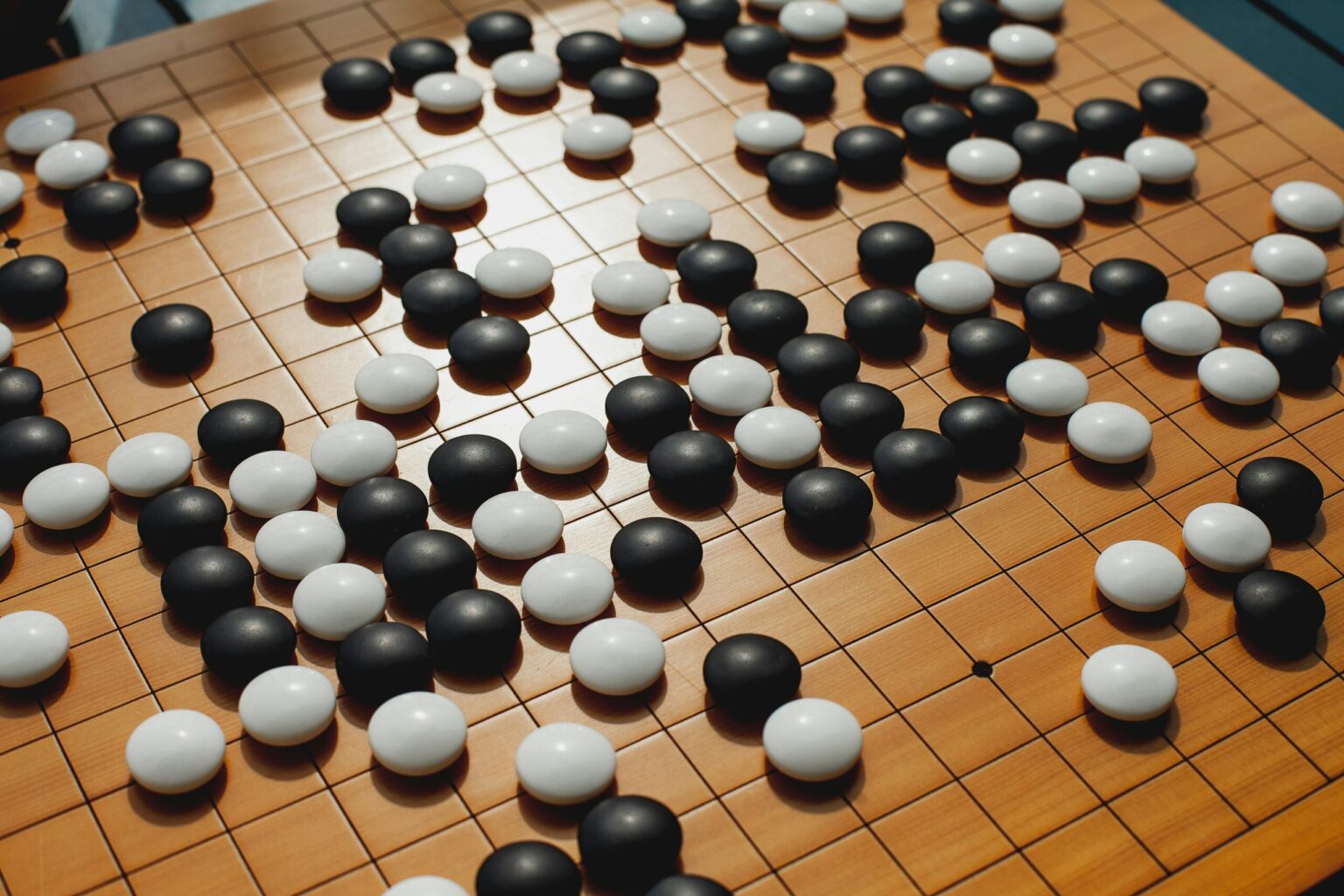

In 2016, Go world champion Lee Sedol faced an opponent who was not made of flesh and blood, but of lines of code.

It soon became clear that the human would lose.

In the end, Lee Sedol lost the match 4–1.

Last week I watched the documentary AlphaGo again — and found it fascinating once more.

The scary thing about it? AlphaGo’s style of play did not come from databases, rules or strategy books alone.

It had also played against itself millions of times and learned how to win in the process.

Move 37 in game 2 was the moment when the whole world understood: This AI doesn’t play like a human — it plays better.

AlphaGo combined supervised learning, reinforcement learning and Monte Carlo tree search. The fascinating part is that much of its strategy emerged from self-play, using reinforcement learning to improve over time.

Today, reinforcement learning is used not only in games but also in robotics (e.g. gripper arms or household robots), in energy optimization (e.g. reducing the energy consumption of data centers) and in traffic control (e.g. optimizing traffic lights).

Reinforcement learning also shapes modern AI assistants: large language models are combined with reinforcement learning, for example Reinforcement Learning from Human Feedback (RLHF), to align the responses of ChatGPT, Claude or Gemini more closely with human preferences.

In this article, I’ll show you exactly how this works, and how we can better understand the mechanism using a simple game: Tic Tac Toe.

What is reinforcement learning?

When we observe a baby learning to walk, we see: It stands up, falls over, tries again — and at some point takes its first steps.

No teacher shows the baby how to do it. Instead, the baby tries out different actions by trial and error until it manages to walk.

When it can stand or walk a few steps, this is a reward for the baby. After all, its goal is to be able to walk. If it falls down, there is no reward.

This learning process of trial, error and reward is the basic idea behind reinforcement learning (RL).

Reinforcement learning is a learning approach in which an agent learns, through interaction with its environment, which actions lead to rewards.

Its goal: To obtain as many rewards as possible in the long term.

- In contrast to supervised learning, there are no “right answers” or labels. The agent has to find out for itself which decisions are good.

- In contrast to unsupervised learning, the aim is not to find hidden patterns in the data, but to carry out those actions that maximize the reward.
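To make this interaction loop concrete, here is a minimal sketch in Python. CorridorEnv and run_episode are hypothetical names chosen for illustration: the agent starts in cell 0 of a five-cell corridor, moves left or right at random, and only receives a reward of +1 when it reaches the last cell. There are no labels; the only feedback is the reward.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..length-1, goal in the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        # action is -1 (left) or +1 (right); the walls clamp the position
        self.pos = min(max(self.pos + action, 0), self.length - 1)
        done = self.pos == self.length - 1
        reward = 1 if done else 0  # reward only for reaching the goal
        return self.pos, reward, done


def run_episode(env, max_steps=100, seed=None):
    """Let a purely random agent interact with env; return (total reward, steps)."""
    rng = random.Random(seed)
    env.reset()
    total_reward, steps = 0, 0
    for _ in range(max_steps):
        action = rng.choice([-1, 1])        # act (trial and error)
        _, reward, done = env.step(action)  # environment responds
        total_reward += reward              # collect the feedback
        steps += 1
        if done:
            break
    return total_reward, steps


reward, steps = run_episode(CorridorEnv(), max_steps=1000, seed=42)
print(f"reward={reward}, steps={steps}")
```

A random agent eventually stumbles onto the goal; a learning agent uses the same rewards to find it faster.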

How an RL agent thinks, decides — and learns

For an RL agent to learn, it needs an idea of where it currently is (state), what it can do (actions), what it wants to achieve (reward) and how promising a situation is based on past experience (value).

In short: an agent acts, gets feedback and gets better.

For this to work, an RL system is usually described in terms of four elements:

1) Policy / Strategy

This is the rule or strategy according to which an agent decides which action to perform in a certain state. In simple cases, this is a lookup table. In more complex applications (e.g. with neural networks), it is a function.
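A minimal sketch of both kinds of policy, assuming a made-up set of states and actions: a lookup table that maps each state directly to an action, and a parameterized function (here a linear softmax over two actions, standing in for a neural network) that turns state features into action probabilities. All names and weights are illustrative only.

```python
import math

# 1) Lookup-table policy: one stored action per (hypothetical) state
table_policy = {"start": "right", "middle": "right", "near_goal": "right"}


def act_from_table(state):
    return table_policy[state]


# 2) Function policy: state features -> action probabilities (linear softmax)
def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def function_policy(features, weights):
    """weights[a] is a weight vector for action a (illustrative values)."""
    scores = [sum(w * f for w, f in zip(w_a, features)) for w_a in weights]
    return softmax(scores)


probs = function_policy([1.0, 0.5], weights=[[0.2, -0.1], [0.4, 0.3]])
print(act_from_table("middle"), [round(p, 3) for p in probs])
```

The table needs one entry per state, which is fine for Tic Tac Toe; the function generalizes across states it has never seen, which is why larger problems use neural networks.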

2) Reward signal

The reward is the feedback from the environment. For example, this can be +1 for a win, 0 for a draw and -1 for a loss. The agent’s goal is to maximize the total reward it collects over time.
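Using the example values above (+1 win, 0 draw, -1 loss), the reward signal can be sketched as a simple lookup, and the quantity the agent tries to maximize is the sum of these rewards. REWARDS, reward and total_reward are hypothetical names for illustration.

```python
REWARDS = {"win": 1, "draw": 0, "loss": -1}


def reward(outcome):
    """Reward signal for a finished game, using the example values."""
    return REWARDS[outcome]


def total_reward(outcomes):
    """What the agent tries to maximize: the sum of the rewards it collects."""
    return sum(reward(o) for o in outcomes)


print(total_reward(["win", "loss", "win", "draw"]))  # 1
```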

3) Value Function

This function estimates the expected future reward of a state. While the reward tells the agent immediately whether an action was “good” or “bad”, the value function estimates how good a state is in the long run, taking into account the future rewards the agent can expect from that state onward.
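One common way to make “expected future reward” precise is the discounted return, where a reward k steps in the future is weighted by γ^k (the Q-learning agent later in this article uses γ = 0.9). A state’s value can then be estimated by averaging the returns observed after visiting it, a so-called Monte Carlo estimate. A minimal sketch with hypothetical reward sequences:

```python
def discounted_return(rewards, gamma=0.9):
    """G = r_0 + gamma * r_1 + gamma**2 * r_2 + ..."""
    g = 0.0
    for r in reversed(rewards):  # accumulate from the last step backwards
        g = r + gamma * g
    return g


def mc_value_estimate(reward_sequences, gamma=0.9):
    """Estimate a state's value as the mean return of episodes starting there."""
    returns = [discounted_return(rs, gamma) for rs in reward_sequences]
    return sum(returns) / len(returns)


# Two hypothetical episodes from the same state:
# a win after 3 steps and a loss after 2 steps
print(discounted_return([0, 0, 1]))             # approximately 0.81
print(mc_value_estimate([[0, 0, 1], [0, -1]]))  # approximately -0.045
```

The discount makes a win that comes sooner worth more than one that comes later, and the averaging is what turns individual rewards into a long-term estimate.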

4) Model of the environment

A model tells the agent: “If I do action A in state S, I will probably end up in state S′ and get reward R.”

Model-free methods such as Q-learning, however, do not need one: they learn directly from experience.
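For intuition, a model can be as simple as a table that maps (state, action) to a predicted (next state, reward). With it, the agent can try out action sequences “in its head” before acting. The states, actions and the simulate helper below are hypothetical:

```python
# Hypothetical tabular model: (state, action) -> (next_state, reward)
MODEL = {
    ("A", "left"): ("A", 0),
    ("A", "right"): ("B", 0),
    ("B", "left"): ("A", 0),
    ("B", "right"): ("Goal", 1),
}


def simulate(model, state, actions):
    """Roll out a plan using only the model, without touching the real environment."""
    total = 0
    for action in actions:
        state, reward = model[(state, action)]
        total += reward
    return state, total


print(simulate(MODEL, "A", ["right", "right"]))  # ('Goal', 1)
print(simulate(MODEL, "A", ["left", "right"]))   # ('B', 0)
```

A model-free method like Q-learning never builds such a table; it updates its estimates directly from transitions it actually experiences.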

Exploitation vs. Exploration: Move 37 – And what we can learn from it

You may remember move 37 from game 2 between AlphaGo and Lee Sedol:

An unusual move that looked like a mistake to us humans – but was later hailed as genius.

Why did the algorithm do that?

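The program chose a move that almost no human player would have considered, trying out something new instead of following familiar patterns. In reinforcement learning, trying out something new is called exploration.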

Reinforcement learning needs both: An agent must find a balance between exploitation and exploration.

- Exploitation means that the agent chooses the actions it already knows to work well.

- Exploration means that the agent tries out actions it has not tested yet (or only rarely), because they could turn out to be better than the ones it already knows.

The agent tries to find the optimal strategy through trial and error.
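The trade-off is easiest to see on a multi-armed bandit, a standard toy problem where each action (“arm”) pays out with an unknown probability. The sketch below uses an ε-greedy rule, the same idea the Tic Tac Toe agent uses later: with probability ε it explores a random arm, otherwise it exploits the arm with the best average reward so far. The payout probabilities and the function name are made up for illustration.

```python
import random


def epsilon_greedy_bandit(true_probs, epsilon=0.1, steps=5000, seed=0):
    rng = random.Random(seed)
    n_arms = len(true_probs)
    counts = [0] * n_arms       # how often each arm was pulled
    estimates = [0.0] * n_arms  # running average reward per arm
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try any arm
        else:
            best = max(estimates)        # exploit: pick a best-known arm
            arm = rng.choice([a for a in range(n_arms) if estimates[a] == best])
        reward = 1 if rng.random() < true_probs[arm] else 0
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # incremental mean
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print([round(e, 2) for e in estimates], counts)
```

With ε = 0, the agent can lock onto whichever arm happened to pay out first; a little exploration lets it discover that the third arm is the best one and then exploit it most of the time.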

Tic-Tac-Toe with reinforcement learning

Let’s take a look at reinforcement learning with a super well-known game.

You’ve probably played it as a child too: Tic Tac Toe.

The game is perfect as an introductory example, as it doesn’t require a neural network, the rules are clear and we can implement the game with just a little Python:

- Our agent starts with zero knowledge of the game. It starts like a human seeing the game for the first time.

- The agent gradually evaluates each game situation: A score of 0.5 means “I don’t know yet whether I’m going to win here.” A score of 1.0 means “This situation will almost certainly lead to victory.”

- By playing many games, the agent observes what works and adapts its strategy.

The goal? For each turn, the agent should choose the action that leads to the highest long-term reward.
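The scores from 0.5 to 1.0 described above correspond to a state-value table. Below is a minimal sketch of that idea in the style of the classic Tic Tac Toe example from Sutton and Barto’s reinforcement learning textbook: unknown states start at 0.5, and after each move the value of the earlier state is nudged toward the value of the state that followed. Note that this state-value formulation differs from the Q-learning agent implemented in this article, which stores values per state-action pair and starts them at 0; the ValueTable class and the state names are hypothetical.

```python
from collections import defaultdict


class ValueTable:
    """Hypothetical state-value table: state -> estimated chance of winning."""

    def __init__(self, alpha=0.1, initial=0.5):
        self.alpha = alpha
        self.values = defaultdict(lambda: initial)  # unseen states: "don't know yet"

    def set_terminal(self, state, value):
        # Final positions get their true value: 1.0 = win, 0.0 = loss
        self.values[state] = value

    def update(self, state, next_state):
        # Move V(state) a fraction alpha toward V(next_state)
        v = self.values[state]
        self.values[state] = v + self.alpha * (self.values[next_state] - v)


table = ValueTable()
table.set_terminal("winning_position", 1.0)
for _ in range(3):
    table.update("position_before_win", "winning_position")
print(round(table.values["position_before_win"], 4))  # 0.6355
```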

In this section, we will build such an RL system step by step and create the file TicTacToeRL.py.

→ You can find all the code in this GitHub repository.

1. Building the environment of the game

In reinforcement learning, an agent learns through interactions with an environment. The environment determines what a state is (e.g. the current board), which actions are permitted (e.g. which fields you can still place your mark on) and what feedback an action produces (e.g. a reward of +1 if you win).

Formally, this setup is described as a Markov Decision Process (MDP): a model consisting of states, actions, transition probabilities and rewards.
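In the usual notation, an MDP is a tuple of states, actions, transition probabilities, rewards and a discount factor, and the agent looks for a policy π that maximizes the expected discounted return. For Tic Tac Toe against a random opponent, the states are board configurations, the actions are the empty fields, and the transitions are random because the opponent’s reply is random.

$$
\text{MDP} = (\mathcal{S}, \mathcal{A}, P, R, \gamma), \qquad P(s' \mid s, a) = \Pr(S_{t+1} = s' \mid S_t = s, A_t = a)
$$

$$
\pi^{*} = \arg\max_{\pi} \; \mathbb{E}_{\pi}\!\left[\sum_{t=0}^{\infty} \gamma^{t} R_{t+1}\right]
$$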

First, we create a class TicTacToe. It manages the game board, which we represent as a 3×3 NumPy array, as well as the game logic:

- The reset(self) function starts a new game.

- The function available_actions() returns all free fields.

- The function step(self, action, player) executes a game move. It returns the new state, a reward from the perspective of the player who just moved (1 = win, 0.5 = draw, 0 = game continues, -10 = invalid move) and whether the game is over. In this example we penalize invalid moves heavily with -10 so that the agent learns to avoid them quickly, a common technique in small RL environments.

- The function check_winner() checks whether a player has three X’s or O’s in a row and has therefore won.

- With render_gui() we display the current board with matplotlib as X and O graphics.

```python
import numpy as np
import matplotlib

matplotlib.use('TkAgg')  # interactive backend; requires Tkinter
import matplotlib.pyplot as plt
import random
from collections import defaultdict


# Tic Tac Toe game environment
class TicTacToe:
    def __init__(self):
        # 0 = empty, 1 = X (agent), -1 = O (opponent)
        self.board = np.zeros((3, 3), dtype=int)
        self.done = False
        self.winner = None

    def reset(self):
        self.board[:] = 0
        self.done = False
        self.winner = None
        return self.get_state()

    def get_state(self):
        # Hashable tuple, so the state can be used as a Q-table key
        return tuple(self.board.flatten())

    def available_actions(self):
        return [(i, j) for i in range(3) for j in range(3) if self.board[i, j] == 0]

    def step(self, action, player):
        """Place player's mark; the reward is from the mover's perspective."""
        if self.done:
            raise ValueError("Game is over")
        i, j = action
        if self.board[i, j] != 0:
            # Invalid move: heavy penalty, the episode ends
            self.done = True
            return self.get_state(), -10, True
        self.board[i, j] = player
        if self.check_winner(player):
            self.done = True
            self.winner = player
            return self.get_state(), 1, True
        elif not self.available_actions():
            self.done = True  # board full: draw
            return self.get_state(), 0.5, True
        return self.get_state(), 0, False

    def check_winner(self, player):
        # Rows and columns
        for i in range(3):
            if all(self.board[i, :] == player) or all(self.board[:, i] == player):
                return True
        # Both diagonals
        if all(np.diag(self.board) == player) or all(np.diag(np.fliplr(self.board)) == player):
            return True
        return False

    def render_gui(self):
        fig, ax = plt.subplots()
        # Draw the grid as plain lines: axis gridlines would be hidden
        # by plt.axis('off') below
        for pos in (0.5, 1.5):
            ax.axhline(pos, color='black', linewidth=2)
            ax.axvline(pos, color='black', linewidth=2)
        ax.set_xlim(-0.5, 2.5)
        ax.set_ylim(-0.5, 2.5)
        for i in range(3):
            for j in range(3):
                value = self.board[i, j]
                if value == 1:
                    ax.plot(j, 2 - i, 'x', markersize=20, markeredgewidth=2, color='blue')
                elif value == -1:
                    circle = plt.Circle((j, 2 - i), 0.3, fill=False, color='red', linewidth=2)
                    ax.add_patch(circle)
        ax.set_aspect('equal')
        plt.axis('off')
        plt.show()
```

2. Program the Q-Learning Agent

Next, we define the learning part: our agent.

It decides which action to perform in a given state in order to collect as much reward as possible.

The agent uses the classic RL method Q-learning. A Q-value is stored for each combination of state and action — the estimated long-term benefit of this action.

The most important methods are:

- Using the choose_action(self, state, actions) function, the agent decides in each game situation whether to choose an action it already knows well (exploitation) or to try out a new action that has not yet been sufficiently tested (exploration). This decision is based on the so-called ε-greedy approach: with probability ε = 0.1, the agent chooses a random action (exploration); with probability 1 − ε = 0.9, it chooses the currently best known action according to its Q-table (exploitation).

- With the function update(state, action, reward, next_state, next_actions), we adjust the Q-value depending on how good the action was and what happens afterwards. This is the central learning step for the agent.
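The update() method implements the standard Q-learning rule. As a worked example with the agent’s default values α = 0.1 and γ = 0.9, suppose Q(s, a) = 0, the move gives reward r = 0, and the best Q-value available from the next state is 0.5 (an illustrative number):

$$
Q(s,a) \leftarrow Q(s,a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s,a) \right]
$$

$$
Q(s,a) \leftarrow 0 + 0.1 \cdot \left[ 0 + 0.9 \cdot 0.5 - 0 \right] = 0.045
$$

So the value of a move that leads toward a promising position rises a little even before the game is won; over many games, these small steps propagate the final reward back to earlier moves.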

```python
# Q-learning agent
class QLearningAgent:
    def __init__(self, alpha=0.1, gamma=0.9, epsilon=0.1):
        self.q_table = defaultdict(float)  # unseen (state, action) pairs default to 0.0
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor for future rewards
        self.epsilon = epsilon  # exploration probability

    def get_q(self, state, action):
        return self.q_table[(state, action)]

    def choose_action(self, state, actions):
        # epsilon-greedy: explore with probability epsilon, otherwise exploit
        if random.random() < self.epsilon:
            return random.choice(actions)
        else:
            q_values = [self.get_q(state, a) for a in actions]
            max_q = max(q_values)
            # Break ties between equally good actions at random
            best_actions = [a for a, q in zip(actions, q_values) if q == max_q]
            return random.choice(best_actions)

    def update(self, state, action, reward, next_state, next_actions):
        # Best Q-value reachable from the next state (0 if the game is over)
        max_q_next = max([self.get_q(next_state, a) for a in next_actions], default=0)
        old_value = self.q_table[(state, action)]
        # Move the old estimate toward the target reward + gamma * max_q_next
        new_value = old_value + self.alpha * (reward + self.gamma * max_q_next - old_value)
        self.q_table[(state, action)] = new_value
```

3. Train the agent

The actual learning process begins in this step. The agent plays many games, learns through trial and error which actions have worked well, and adapts its strategy.

In doing so, it learns how its actions are rewarded, how its behavior affects later states and how better strategies develop in the long term.

- With the function train(agent, episodes=10000), the agent plays 10,000 games against a simple random opponent. In each episode, the agent (player 1, X) makes a move, followed by the opponent (player -1, O). After each of its moves, the agent learns through update().

- Every 1,000 games, we record how many wins, draws and losses there have been.

- Finally, we plot the learning curve with matplotlib. It shows how the agent improves over time.
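The per-1,000-game bookkeeping described above boils down to counting outcomes per block of episodes. As a standalone sketch (window_rates is a hypothetical helper, not part of TicTacToeRL.py), only complete blocks are counted, just as the training loop only records rates at multiples of 1,000:

```python
from collections import Counter


def window_rates(outcomes, window=1000):
    """Split 'win'/'draw'/'loss' outcomes into full blocks and return rates per block."""
    rates = []
    for start in range(0, len(outcomes) - window + 1, window):
        counts = Counter(outcomes[start:start + window])
        rates.append({k: counts[k] / window for k in ("win", "draw", "loss")})
    return rates


demo = ["loss", "win", "draw", "win"] * 500  # 2,000 hypothetical outcomes
for block in window_rates(demo):
    print(block)
```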

```python
# Training with learning curve
def train(agent, episodes=10000):
    env = TicTacToe()
    results = {"win": 0, "draw": 0, "loss": 0}
    win_rates, draw_rates, loss_rates = [], [], []

    for episode in range(episodes):
        state = env.reset()
        done = False
        while not done:
            # Agent (player 1) moves
            actions = env.available_actions()
            action = agent.choose_action(state, actions)
            next_state, reward, done = env.step(action, player=1)
            if done:
                agent.update(state, action, reward, next_state, [])
                if reward == 1:
                    results["win"] += 1
                elif reward == 0.5:
                    results["draw"] += 1
                else:
                    results["loss"] += 1
                break

            # Random opponent (player -1) moves
            opp_actions = env.available_actions()
            opp_action = random.choice(opp_actions)
            next_state2, reward2, done = env.step(opp_action, player=-1)
            if done:
                # reward2 is from the opponent's perspective: an opponent
                # win is a loss for the agent, a draw stays a draw (+0.5)
                if reward2 == 1:
                    agent_reward = -1
                    results["loss"] += 1
                elif reward2 == 0.5:
                    agent_reward = 0.5
                    results["draw"] += 1
                else:  # invalid opponent move (cannot happen with random.choice)
                    agent_reward = 1
                    results["win"] += 1
                agent.update(state, action, agent_reward, next_state2, [])
                break

            # Game continues: learn from the state after the opponent's reply
            next_actions = env.available_actions()
            agent.update(state, action, reward, next_state2, next_actions)
            state = next_state2

        # Record win/draw/loss rates every 1000 episodes
        if (episode + 1) % 1000 == 0:
            total = sum(results.values())
            win_rates.append(results["win"] / total)
            draw_rates.append(results["draw"] / total)
            loss_rates.append(results["loss"] / total)
            print(f"Episode {episode+1}: Wins {results['win']}, Draws {results['draw']}, Losses {results['loss']}")
            results = {"win": 0, "draw": 0, "loss": 0}

    x = [i * 1000 for i in range(1, len(win_rates) + 1)]
    plt.plot(x, win_rates, label="Win Rate")
    plt.plot(x, draw_rates, label="Draw Rate")
    plt.plot(x, loss_rates, label="Loss Rate")
    plt.xlabel("Episodes")
    plt.ylabel("Rate")
    plt.title("Learning curve of the Q-learning agent")
    plt.legend()
    plt.grid(True)
    plt.tight_layout()
    plt.show()
```

4. Visualization of the board

With the main block `if __name__ == "__main__":` we define the entry point of the program. It ensures that the agent is trained automatically when we run the script. Afterwards, we use the render_gui() method to display an example Tic Tac Toe board as a graphic.

```python
# Main program
if __name__ == "__main__":
    agent = QLearningAgent()
    train(agent, episodes=10000)

    # Visualize an example board
    env = TicTacToe()
    env.board[0, 0] = 1
    env.board[1, 1] = -1
    env.render_gui()
```

Execution in the terminal

We save the code in the file TicTacToeRL.py.

In the terminal, we now navigate to the directory where TicTacToeRL.py is stored and run it with the command `python TicTacToeRL.py`.

In the terminal, we can see how many games our agent has won after every 1000th episode:

And in the visualization we see the learning curve:

Final Thoughts

With TicTacToe, we use a simple game and some Python — but we can easily see how Reinforcement Learning works:

- The agent starts without any prior knowledge.

- It develops a strategy through feedback and experience.

- Its decisions gradually improve as a result – not because it knows the rules, but because it learns.

In our example, the opponent was a random agent. Next, we could see how our Q-learning agent performs against another learning agent or against ourselves.

Reinforcement learning shows us that machine intelligence is not only created through knowledge or information – but through experience, feedback and adaptation.