Introduction

In the “ever rapidly changing landscape of Data and AI” (!), understanding data and AI architecture has never been more critical. However, something many leaders overlook is the importance of data team structure.

While many of you reading this probably identify as the data team, something most don’t realise is how limiting that mindset can be.

Indeed, different team structures and skill requirements significantly impact an organisation’s ability to actually use Data and AI to drive meaningful results. To understand this, it’s helpful to think of an analogy.

Imagine a two-person household. John works from home and Jane goes to the office. There’s a bunch of house admin Jane relies on John to do, which is a lot easier since he’s the one at home most of the time.

Jane and John have kids, and after they’ve grown up a bit, John has twice as much admin to do! Thankfully, the kids are trained to do the basics: they can wash up, tidy, and even occasionally do a bit of hoovering with some coercion.

As the kids grow up, John’s parents move in. They’re pretty old, so John looks after them, but fortunately, the kids are basically self-sufficient at this point. Over time John’s role has changed quite a bit! But he’s always made it one happy, nuclear family — thanks to John and Jane.

Back to data — John is a bit like the data team, and everyone else is a domain expert. They rely on John, but in different ways. This has changed a lot over time, and if it hadn’t it could have been a disaster.

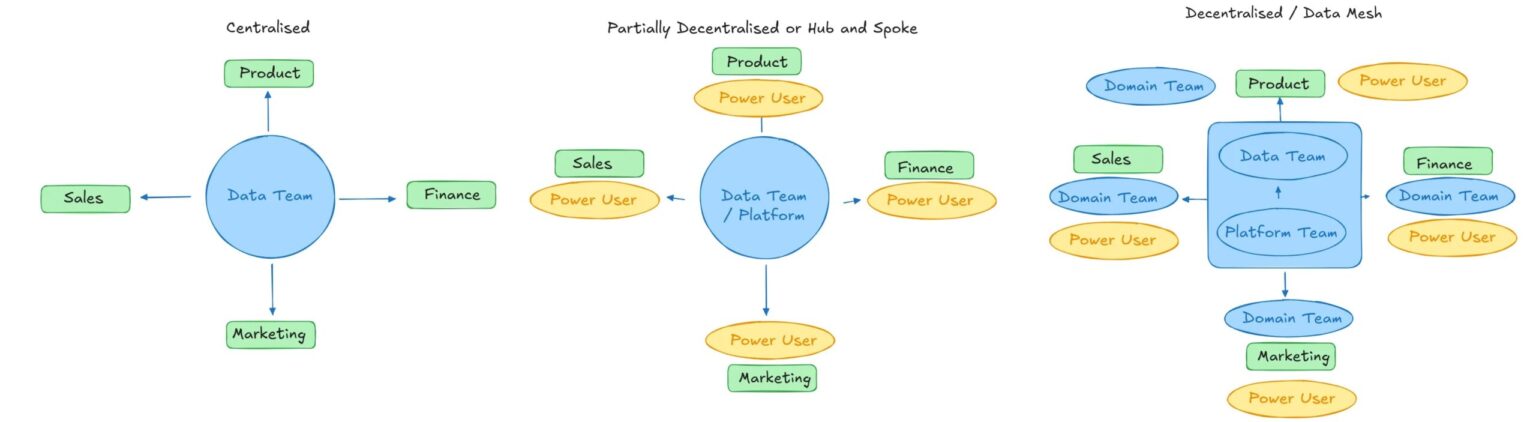

In the rest of this article, we’ll explore John’s journey from a Centralised team, through Hub-and-spoke, to a Platform mesh-style data team.

Centralised teams

A central team is responsible for a lot of things that will be familiar to you:

- Core data platform and architecture: the frameworks and tooling used to facilitate Data and AI workloads.

- Data and AI engineering: centralising and cleaning datasets; structuring unstructured data for AI workloads

- BI: building dashboards to visualise insights

- AI and ML: the training and deployment of models on the aforementioned clean data

- Advocating for the value of data and training people to understand how to use BI tools

This is a lot of work for a few people! In fact, it’s practically impossible to nail all of this at once. It’s best to keep things small and manageable, focusing on a few key use cases and leveraging powerful tooling to get a head start early.

You might even get a nanny or au pair to help with the work (in this case, consultants).

But this pattern has flaws. It is easy to fall into the silo trap, a scenario where the central team becomes a huge bottleneck for Data and AI requests. Data teams also need to acquire domain knowledge from domain experts to answer requests effectively, which is time-consuming and hard.

One way out is to expand the team. More people means more output. However, there are better, more modern approaches that can make things go even faster.

But there is only one John. So what can he do?

Partially decentralised or hub and spoke

The partially decentralised setup is an attractive model for medium-sized organisations or small, tech-first ones where there are technical skills outside of the data team.

The simplest form has the data team maintaining BI infrastructure, but not the content itself. This is left to ‘power users’ who take matters into their own hands and build the BI themselves.

This, of course, runs into all kinds of issues, such as the silo trap, data discovery, governance, and confusion. Confusion is especially painful when people who are told to self-serve try and fail due to a lack of understanding of the data.

An increasingly popular approach is for additional layers of the stack to be opened up. There is the rise of the analytics engineer, and data analysts are increasingly taking on more responsibility. This includes using tools, doing data modelling, building end-to-end pipelines, and advocating to the business.

This has led to enormous problems when implemented incorrectly. You wouldn’t let your five-year-old son look after your elders and take care of the house unattended.

Specifically, a lack of understanding of basic data modelling principles and of how data warehouse engines work leads to model sprawl and spiralling costs. There are two classic examples.

One is when multiple people try to define the same thing, such as revenue. Marketing, finance, and product all have a different version. This leads to inevitable arguments at quarterly business reviews when every department reports a different number: analysis paralysis.

The other is rolling counts. Let’s say finance wants revenue for the month, but product wants to know what it is on a rolling seven-day basis. “That’s easy,” says the analyst. “I’ll just create some materialised views with these metrics in them”.

As any data engineer knows, this rolling counts operation is pretty expensive, especially if the granularity needs to be by day or hour, since then you need a calendar to ‘fan out’ the model. Before you know it there are rolling_30_day_sales, rolling_7_day_sales, rolling_45_day_sales and so on. These models cost an order of magnitude more than was required.

Simply asking for the lowest granularity required (daily), materialising that, and creating views downstream can solve this problem but would require some central resource.
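As a rough illustration of that fix, here is a minimal Python sketch. The order data and the function names (`daily_sales`, `rolling_sales`) are made up for the example. The daily totals are computed once, and every rolling window is derived from them on demand instead of being materialised as its own model.

```python
from collections import defaultdict
from datetime import date, timedelta

# Hypothetical raw order events: (order_date, amount).
orders = [
    (date(2024, 1, 1), 100.0),
    (date(2024, 1, 1), 50.0),
    (date(2024, 1, 2), 25.0),
    (date(2024, 1, 4), 75.0),
]


def daily_sales(events):
    """Aggregate raw events to one total per day (the 'materialised' layer)."""
    totals = defaultdict(float)
    for day, amount in events:
        totals[day] += amount
    return dict(totals)


def rolling_sales(daily, as_of, window_days):
    """Derive a rolling total from the daily layer (the cheap 'view' layer)."""
    start = as_of - timedelta(days=window_days - 1)
    return sum(amount for day, amount in daily.items() if start <= day <= as_of)


daily = daily_sales(orders)  # computed once

# Any window length reuses the same daily totals.
rolling_7 = rolling_sales(daily, date(2024, 1, 4), 7)
rolling_2 = rolling_sales(daily, date(2024, 1, 4), 2)
print(rolling_7, rolling_2)  # 250.0 75.0
```

In a warehouse the same shape would be a single daily table with views on top; the point is only that a new window length should not mean a new expensive model.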

An early Hub and Spoke model must have a clear delineation of responsibility if the knowledge outside the data team is still immature.

As teams grow, legacy, code-only frameworks like Apache Airflow also give rise to a problem: a lack of visibility. People outside the data team seeking to understand what is going on will be reliant on additional tools to understand what happens end-to-end, since legacy UIs do not aggregate metadata from different sources.

It is imperative to surface this information to domain experts. How many times have you been told the ‘data doesn’t look right’, only to realise after tracing everything manually that it was an issue on the data producer side?

By increasing visibility, domain experts are connected directly to owners of source data or processes, which allows fixes to be faster. This removes unnecessary load, context switching, and tickets for the data team.
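To make the idea concrete, here is a small Python sketch of tracing a broken dashboard back to its source. Everything in it is hypothetical: the asset names, the `upstream` and `owners` mappings, and the `failing_sources` helper are invented for illustration and do not reflect any particular tool's API.

```python
# Hypothetical lineage: each asset maps to the assets it reads from.
upstream = {
    "revenue_dashboard": ["fct_invoices"],
    "fct_invoices": ["stg_erp_invoices", "dim_customers"],
    "stg_erp_invoices": ["erp_export"],
    "dim_customers": ["crm_export"],
}

# Hypothetical owners of the source systems.
owners = {
    "erp_export": "finance-systems",
    "crm_export": "sales-ops",
}

# Assets currently known to be failing or stale.
failed = {"erp_export"}


def failing_sources(asset, upstream, failed):
    """Walk upstream from `asset` and return every failed asset it depends on."""
    seen, stack, hits = set(), [asset], []
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        if node in failed:
            hits.append(node)
        stack.extend(upstream.get(node, []))
    return sorted(hits)


for source in failing_sources("revenue_dashboard", upstream, failed):
    print(f"{source} is failing; contact {owners[source]}")
```

With this kind of lookup exposed to domain experts, the "data doesn't look right" question can go straight to the team that owns the failing source.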

Hub and spoke (pure)

A pure hub and spoke is a bit like delegating specific responsibilities to your teenage children within clear guardrails. You don’t just give them tasks to do like taking the bins out and cleaning their room; you ask for what you want, like a “clean and tidy room,” and you trust them to do it. Incentives work well here.

In a pure hub and spoke approach, the data team administers the platform and lets others use it. They build the frameworks for building and deploying AI and Data pipelines, and manage access control.

Domain experts can build stuff end-to-end if they need to. This means they can move data, model it, orchestrate the pipeline, and activate it with AI or dashboards as they see fit.

Often, the central team will also do a bit of this. Where data models across domains are complex and overlapping, they should almost always take ownership of delivering core data models. The tail should not wag the dog.

This starts to resemble a data product mindset: while a finance team could take ownership of ingesting and cleaning ERP data, the central team would own important data products like the customers table or invoices table.

This structure is very powerful as it is very collaborative. However, it often works only if domain teams have a reasonably high degree of technical proficiency.

Platforms that allow code and no-code to be used together are recommended here; otherwise, a hard technical dependency on the central team will always exist.

Another characteristic of this pattern is training and support. The central team or hub will spend some time supporting and upskilling the spokes to build AI and Data workflows efficiently within guardrails.

Again, providing visibility here is hard with legacy orchestration frameworks. Central teams will be burdened with keeping metadata stores up-to-date, like Data Catalogs, so business users can understand what is going on.

The alternative, upskilling domain experts to have deep Python expertise and to learn frameworks with steep learning curves, is even harder to pull off.

Platform mesh/data product

The natural endpoint in our theoretical household journey takes us to the much-criticised Data Mesh or Platform Mesh approach.

In this household, everyone is expected to know what their responsibilities are. Children are all grown up and can be relied on to keep the house in order and look after its inhabitants. There is close collaboration and everyone works together seamlessly.

Sounds pretty idealistic, don’t you think!?

In practice, it’s rarely this easy. Allowing satellite teams to use their own infrastructure and build whatever they want is a surefire way to lose control and slow things down.

Even if you were to standardise tooling across teams, best practices would still suffer.

I’ve spoken to countless teams in massive organisations such as retail chains or airlines, where avoiding a mesh is not an option because multiple business divisions depend on each other.

These teams use different tools. Some leverage Airflow instances and legacy frameworks built by consultants years ago. Others use the latest tech and a full, bloated, Modern Data Stack.

They all struggle with the same problems: collaboration, communication, and orchestrating flows across different teams.

Implementing a single overarching platform for building Data and AI workflows here can help. A unified control plane is almost like an orchestrator of orchestrators that aggregates metadata across different places and shows end-to-end lineage across domains.

Naturally, it makes for an effective control plane where anyone can gather to debug failed pipelines, communicate, and recover, all without relying on a central Data Engineering Team that would otherwise be a bottleneck.
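A toy Python sketch of what "aggregating metadata across different places" could look like is below. It is only an assumption of the shape such a view might take: the `RunRecord` type, the `merge_feeds` and `failures_by_team` helpers, and the hand-written run records are all hypothetical, and nothing here calls a real orchestrator's API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RunRecord:
    source: str    # which orchestrator reported the run (hypothetical labels)
    team: str      # owning domain team
    pipeline: str  # pipeline name
    status: str    # "success" or "failed"


def merge_feeds(*feeds):
    """Combine run records from several orchestrators into one list."""
    return [run for feed in feeds for run in feed]


def failures_by_team(runs):
    """Group failed pipelines by owning team for a single cross-team view."""
    grouped = defaultdict(list)
    for run in runs:
        if run.status == "failed":
            grouped[run.team].append(run.pipeline)
    return {team: sorted(pipelines) for team, pipelines in grouped.items()}


# Hand-written example feeds standing in for two teams' tooling.
airflow_runs = [
    RunRecord("airflow", "finance", "erp_ingest", "failed"),
    RunRecord("airflow", "finance", "invoice_model", "success"),
]
cron_runs = [
    RunRecord("cron", "marketing", "campaign_sync", "failed"),
]

all_runs = merge_feeds(airflow_runs, cron_runs)
summary = failures_by_team(all_runs)
print(summary)
```

The useful property is that the summary does not care which tool produced each record, so each team can keep its own orchestrator while everyone debugs from one view.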

There are clear analogies for this in software engineering. Often, code produces logs that are collated by a single tool such as Datadog. These platforms provide a single place to see everything happening (or not happening), receive alerts, and collaborate on incident resolution.

Summary

Organisations are like families. As much as we like the idea of one big, happy, self-sufficient family, there are often responsibilities we need to bear to make things work out initially.

As they mature, members get closer to independence, like John’s kids. Others find their place as dependent but loyal stakeholders, like John’s parents.

Organisations are no different. Data Teams are maturing away from do-ers in Centralised Teams to Enablers in Hub and Spoke architectures. Eventually, most organisations will have dozens if not hundreds of people who are pioneering Data and AI workflows in their own spokes.

Once this happens, it’s likely that how Data and AI is used in small, agile organisations will resemble the complexity of much larger enterprises where collaboration and orchestration across different teams is inevitable.

Understanding where organisations are in relation to these patterns is imperative. Trying to force a Data-as-Product mindset on an immature company, or sticking to a large central team in a large and mature organisation, will result in disaster.

Good luck 🍀