At the heart of every successful AI Agent lies one essential skill: prompting (or “prompt engineering”). It is the method of instructing LLMs to perform tasks by carefully designing the input text.

Prompt engineering evolved from the inputs fed to the first text-to-text NLP models (2018). At the time, developers typically focused more on modeling and feature engineering. After the arrival of large GPT models (2022), most of us started using pre-trained tools, so the focus shifted to input formatting. Hence the “prompt engineering” discipline was born, and by now (2025) it has matured into a blend of art and science, as NLP blurs the line between code and prompt.

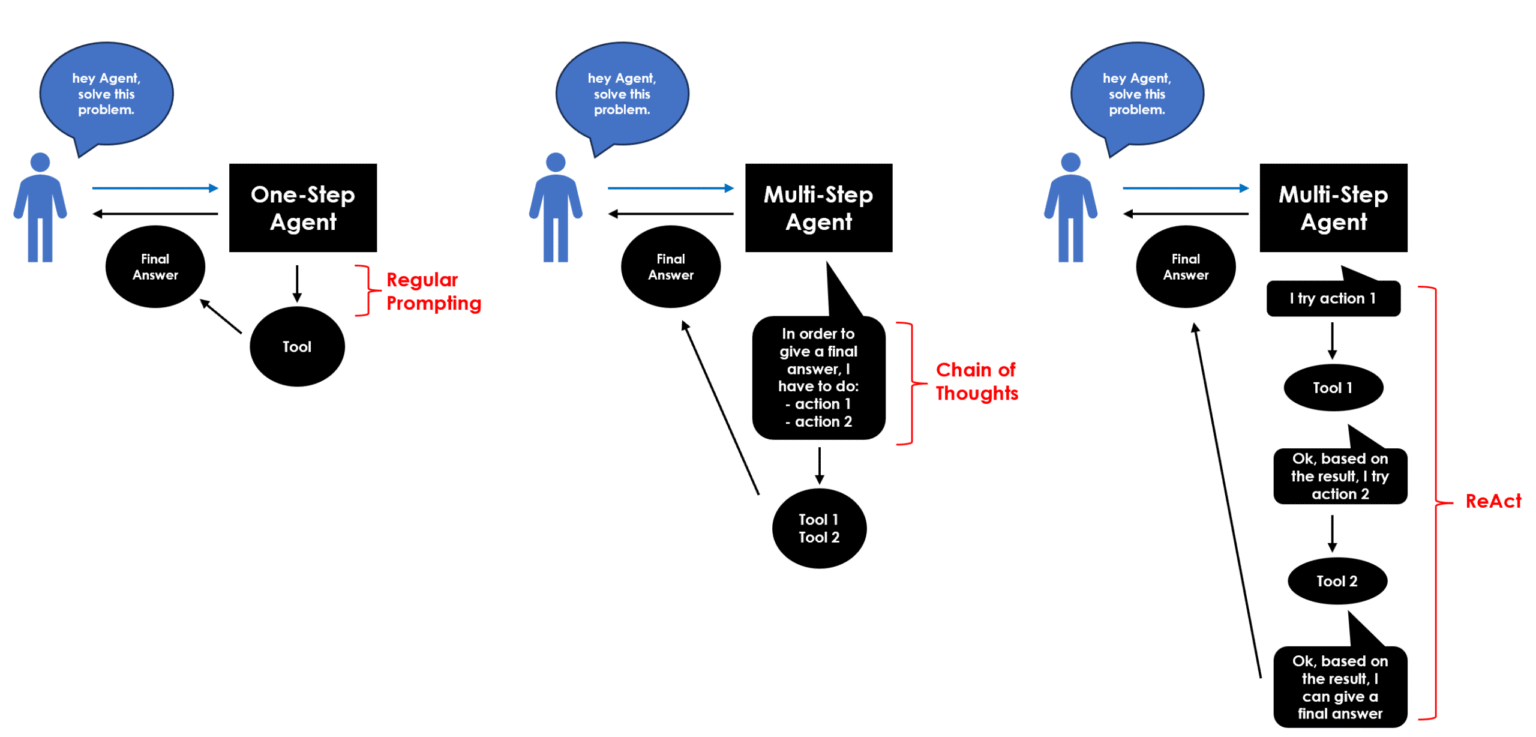

Different types of prompting techniques create different types of Agents. Each method enhances a specific skill: logic, planning, memory, accuracy, and Tool integration. Let’s see them all with a very simple example.

```python
## setup
import ollama
llm = "qwen2.5"

## question
q = "What is 30 multiplied by 10?"
```

Main techniques

1) “Regular” prompting – just ask a question and get a straightforward answer. It is also called “Zero-Shot” prompting when the model is given a task without any prior examples of how to solve it. This basic technique suits One-Step Agents that execute the task without intermediate reasoning, which is how early models typically worked.

```python
response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content':q}
])
print(response['message']['content'])
```
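For contrast with the zero-shot call above, here is a minimal sketch of what "prior examples" would look like in the message list. The helper name `build_messages` and the sample question/answer pairs are hypothetical and not part of the original code.

```python
def build_messages(question, examples=()):
    """Build a chat message list; with no examples this is a zero-shot prompt."""
    messages = []
    for example_q, example_a in examples:
        # Each worked example becomes a user/assistant pair placed before the real question.
        messages.append({'role': 'user', 'content': example_q})
        messages.append({'role': 'assistant', 'content': example_a})
    messages.append({'role': 'user', 'content': question})
    return messages

# Zero-shot: only the question itself.
zero_shot = build_messages("What is 30 multiplied by 10?")

# Few-shot: the same question preceded by two made-up solved examples.
few_shot = build_messages(
    "What is 30 multiplied by 10?",
    examples=[("What is 2 multiplied by 3?", "6"), ("What is 7 multiplied by 5?", "35")],
)
```

Either list could then be passed as the `messages` argument of `ollama.chat`.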

2) ReAct (Reason+Act) – a combination of reasoning and action. The model not only thinks through a problem but also takes action based on its reasoning. It is more interactive, as the model alternates between reasoning steps and actions, refining its approach iteratively. Basically, it’s a loop of thought-action-observation. It is used for more complicated tasks, like searching the web and making decisions based on the findings, and is typically designed for Multi-Step Agents that perform a series of reasoning steps and actions to arrive at a final result. These Agents can break down complex tasks into smaller, more manageable parts that progressively build upon one another.

Personally, I really like ReAct Agents as I find them more similar to humans because they “f*ck around and find out” just like us.

```python
prompt = '''
To solve the task, you must plan forward to proceed in a series of steps, in a cycle of 'Thought:', 'Action:', and 'Observation:' sequences.
At each step, in the 'Thought:' sequence, you should first explain your reasoning towards solving the task, then the tools that you want to use.
Then in the 'Action:' sequence, you should use one of your tools.
During each intermediate step, you can use the 'Observation:' field to save whatever important information you will use as input for the next step.
'''

response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content':q+" "+prompt}
])
print(response['message']['content'])
```
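The prompt above asks the model to narrate the whole cycle in a single reply. In a tool-using Agent, the surrounding code would typically run the loop itself: read the 'Action:', call the tool, and feed the result back as an 'Observation:'. The following is only a sketch of that idea. The `multiply` tool, the `Action: name(args)` format, the 'Final Answer:' marker, and the `scripted_chat` stand-in for the LLM are all assumptions made for illustration.

```python
import re

def multiply(a, b):
    """Hypothetical tool: the only action this toy agent can take."""
    return a * b

TOOLS = {"multiply": multiply}

def react_loop(question, chat, max_steps=5):
    """Alternate model turns and tool calls until the model emits 'Final Answer:'.

    `chat` is any callable that takes the transcript (str) and returns the next model turn (str).
    """
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        turn = chat(transcript)
        transcript += turn + "\n"
        final = re.search(r"Final Answer:\s*(.+)", turn)
        if final:
            return final.group(1).strip(), transcript
        # Expect an action formatted as: Action: tool_name(arg1, arg2)
        action = re.search(r"Action:\s*(\w+)\(([^)]*)\)", turn)
        if action and action.group(1) in TOOLS:
            try:
                args = [float(x) for x in action.group(2).split(",")]
                observation = TOOLS[action.group(1)](*args)
            except (ValueError, TypeError) as err:
                observation = f"tool error: {err}"
        else:
            observation = "unknown or malformed action"
        # The tool result is fed back so the next Thought can build on it.
        transcript += f"Observation: {observation}\n"
    return None, transcript

def scripted_chat(transcript):
    """Stand-in for an LLM so the loop can run offline."""
    if "Observation:" not in transcript:
        return "Thought: I should use the multiply tool.\nAction: multiply(30, 10)"
    return "Thought: The tool returned the product.\nFinal Answer: 300"

answer, trace = react_loop("What is 30 multiplied by 10?", scripted_chat)
print(trace)
```

To try it with a real model, `scripted_chat` could be swapped for a small wrapper around `ollama.chat` that sends the transcript as the user message.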

3) Chain-of-Thought (CoT) – a reasoning pattern that involves generating the process to reach a conclusion. The model is pushed to “think out loud” by explicitly laying out the logical steps that lead to the final answer. Basically, it’s a plan without feedback. CoT is the most widely used technique for advanced tasks, like solving a math problem that might need step-by-step reasoning, and is typically designed for Multi-Step Agents.

```python
prompt = '''Let's think step by step.'''

response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content':q+" "+prompt}
])
print(response['message']['content'])
```
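A CoT reply mixes the reasoning with the result, so code that consumes it usually needs a way to pull out just the answer. One possible approach, sketched here under assumptions, is to ask for a fixed closing line and parse it. The `COT_SUFFIX` wording, the 'Final answer:' marker, and the hand-written `sample_output` are illustrative and not real model output.

```python
import re

# Hypothetical instruction appended to the question instead of the plain CoT trigger.
COT_SUFFIX = "Let's think step by step, then end with a line of the form 'Final answer: <value>'."

def extract_final_answer(text):
    """Return the text after the last 'Final answer:' marker, or None if absent."""
    matches = re.findall(r"final answer:\s*(.+)", text, flags=re.IGNORECASE)
    return matches[-1].strip() if matches else None

# Hand-written sample of what a step-by-step reply might look like.
sample_output = """Step 1: 30 multiplied by 10 means adding 30 ten times.
Step 2: 30 x 10 = 300.
Final answer: 300"""

print(extract_final_answer(sample_output))
```

Taking the last match rather than the first is a deliberate choice: if the model mentions the marker while reasoning, the closing line still wins.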

CoT extensions

Several newer prompting approaches derive from the Chain-of-Thought technique.

4) Reflexion prompting that adds an iterative self-check or self-correction phase on top of the initial CoT reasoning, where the model reviews and critiques its own outputs (spotting mistakes, identifying gaps, suggesting improvements).

```python
cot_answer = response['message']['content']

response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content': f'''Here was your original answer:\n\n{cot_answer}\n\n
Now reflect on whether it was correct or if it was the best approach.
If not, correct your reasoning and answer.'''}
])
print(response['message']['content'])
```
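The snippet above performs a single reflection pass. Since the technique is described as iterative, a loop that critiques and revises until the critique is satisfied might look like the sketch below. The function name `reflexion_loop`, the 'OK' stop signal, and the scripted replies are assumptions for illustration; `chat` stands for any callable that maps a prompt string to a reply string.

```python
def reflexion_loop(question, chat, max_rounds=3):
    """Answer, critique, and revise until the critique says 'OK' or rounds run out."""
    answer = chat(f"{question} Let's think step by step.")
    for _ in range(max_rounds):
        critique = chat(
            f"Task: {question}\nAnswer: {answer}\n"
            "Reply with exactly 'OK' if the answer is correct; otherwise describe the mistake."
        )
        if critique.strip().upper() == "OK":
            break
        # Feed the critique back so the next attempt can correct the mistake.
        answer = chat(
            f"Task: {question}\nPrevious answer: {answer}\nCritique: {critique}\n"
            "Write a corrected answer."
        )
    return answer

# Scripted replies standing in for the model: wrong answer, critique, fix, approval.
replies = iter(["310", "The product of 30 and 10 is 300, not 310.", "300", "OK"])
final = reflexion_loop("What is 30 multiplied by 10?", lambda prompt: next(replies))
print(final)
```

The `max_rounds` cap matters in practice, because a model can keep finding fault with a correct answer.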

5) Tree-of-Thoughts (ToT) that generalizes CoT into a tree, exploring multiple reasoning chains simultaneously.

```python
num_branches = 3

prompt = f'''
You will think of multiple reasoning paths (thought branches). For each path, write your reasoning and final answer.
After exploring {num_branches} different thoughts, pick the best final answer and explain why.
'''

response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content': f"Task: {q} \n{prompt}"}
])
print(response['message']['content'])
```
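The prompt above asks the model to explore the branches inside one reply. ToT can also be run as an explicit search, where code generates candidate thoughts, scores them, and keeps only the most promising chains at each level. Below is a minimal sketch of that breadth-limited variant. The function name, the `propose`/`score` callables, and the toy number puzzle are all hypothetical; with an LLM, both callables would be model calls.

```python
def tree_of_thoughts(root, propose, score, breadth=2, depth=2):
    """Breadth-limited search over partial reasoning chains.

    `propose(chain)` returns candidate next thoughts; `score(chain)` rates a chain (higher is better).
    """
    frontier = [[root]]
    for _ in range(depth):
        candidates = [chain + [thought] for chain in frontier for thought in propose(chain)]
        if not candidates:
            break
        # Keep only the most promising chains, pruning the rest of the tree.
        frontier = sorted(candidates, key=score, reverse=True)[:breadth]
    return max(frontier, key=score)

# Toy puzzle (an assumption for this demo): starting from 3, apply two operations to reach 300.
def toy_propose(chain):
    value = chain[-1]
    return [value + 10, value * 10, value * 2]

def toy_score(chain):
    # Closer to the target of 300 scores higher.
    return -abs(300 - chain[-1])

best = tree_of_thoughts(3, toy_propose, toy_score, breadth=2, depth=2)
print(best)
```

Here the winning chain is 3, then 30, then 300, while the weaker branches are dropped after each level.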

6) Graph‑of‑Thoughts (GoT) that generalizes CoT into a graph, considering also interconnected branches.

```python
class GoT:
    def __init__(self, question):
        self.question = question
        self.nodes = {}  # node_id: text
        self.edges = []  # (from_node, to_node, relation)
        self.counter = 1

    def add_node(self, text):
        node_id = f"Thought{self.counter}"
        self.nodes[node_id] = text
        self.counter += 1
        return node_id

    def add_edge(self, from_node, to_node, relation):
        self.edges.append((from_node, to_node, relation))

    def show(self):
        print("\n--- Current Thoughts ---")
        for node_id, text in self.nodes.items():
            print(f"{node_id}: {text}\n")
        print("--- Connections ---")
        for f, t, r in self.edges:
            print(f"{f} --[{r}]--> {t}")
        print("\n")

    def expand_thought(self, node_id):
        prompt = f"""
        You are reasoning about the task: {self.question}
        Here is a previous thought node ({node_id}):\"\"\"{self.nodes[node_id]}\"\"\"
        Please provide a refinement, an alternative viewpoint, or a related thought that connects to this node.
        Label your new thought clearly, and explain its relation to the previous one.
        """
        response = ollama.chat(model=llm, messages=[{'role':'user', 'content':prompt}])
        return response['message']['content']
```

```python
## Start Graph
g = GoT(q)

## Get initial thought
response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content':q}
])
n1 = g.add_node(response['message']['content'])

## Expand initial thought with some refinements
refinements = 1
for _ in range(refinements):
    expansion = g.expand_thought(n1)
    n_new = g.add_node(expansion)
    g.add_edge(n1, n_new, "expansion")
g.show()

## Final Answer
prompt = f'''
Here are the reasoning thoughts so far:
{chr(10).join([f"{k}: {v}" for k,v in g.nodes.items()])}
Based on these, select the best reasoning and final answer for the task: {q}
Explain your choice.
'''

## Send the prompt that lists the collected thoughts, not the bare question
response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content':prompt}
])
print(response['message']['content'])
```
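The `GoT` class above only expands a single node, which still yields a tree shape. The "interconnected branches" come from operations such as merging several thoughts into one node that points back to all of them. The following is a rough, standalone sketch of such a step using plain dicts and lists in the same layout as `nodes` and `edges`. The `aggregate` function, the "aggregation" relation label, and the stubbed `chat` callable are assumptions for illustration.

```python
def aggregate(nodes, edges, parent_ids, chat):
    """Merge several thought nodes into one new node that links back to all of them.

    `nodes` maps node_id -> text, `edges` is a list of (from_node, to_node, relation),
    and `chat` is any callable mapping a prompt string to a reply string.
    """
    listing = "\n".join(f"{pid}: {nodes[pid]}" for pid in parent_ids)
    merged_text = chat(f"Combine these thoughts into a single improved thought:\n{listing}")
    new_id = f"Thought{len(nodes) + 1}"
    nodes[new_id] = merged_text
    for pid in parent_ids:
        # Several incoming edges on one node are what make this a graph rather than a tree.
        edges.append((pid, new_id, "aggregation"))
    return new_id

# Two hand-written thoughts and a stubbed model reply, so the example runs offline.
nodes = {"Thought1": "30 x 10 = 300", "Thought2": "Multiplying by 10 appends a zero: 30 -> 300"}
edges = []
merged = aggregate(nodes, edges, ["Thought1", "Thought2"], chat=lambda p: "Both routes give 300.")
print(merged, edges)
```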

7) Program‑of‑Thoughts (PoT) that specializes in programming, where the reasoning happens via executable code snippets.

```python
import builtins
import re

def extract_python_code(text):
    ## Pull the first ```python ... ``` block out of the model's reply
    match = re.search(r"```python(.*?)```", text, re.DOTALL)
    if match:
        return match.group(1).strip()
    return None

def sandbox_exec(code):
    ## Create a minimal sandbox with safety limitation:
    ## only the whitelisted builtins are visible to the executed code.
    ## Note: this limits accidents, it is not a real security boundary.
    allowed_builtins = {'abs', 'min', 'max', 'pow', 'round'}
    safe_globals = {'__builtins__': {k: getattr(builtins, k) for k in allowed_builtins}}
    safe_locals = {}
    exec(code, safe_globals, safe_locals)
    return safe_locals.get('result', None)

prompt = '''
Write a short Python program that calculates the answer and assigns it to a variable named 'result'.
Return only the code enclosed in triple backticks with 'python' (```python ... ```).
'''

response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content': f"Task: {q} \n{prompt}"}
])
print(response['message']['content'])

code = extract_python_code(text=response['message']['content'])
if code is not None:  ## the model may reply without a fenced code block
    print(sandbox_exec(code=code))
```
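Restricting builtins around `exec` reduces accidents, but it is not a strong security boundary. When the model only needs to produce an arithmetic expression, one alternative is to parse the expression and evaluate only a small set of allowed operations, without calling `exec` at all. This is a different technique from the one used above, shown here as a sketch; the function name `eval_arithmetic` and the set of allowed operators are assumptions.

```python
import ast
import operator

# Only these binary operators are evaluated; anything else is rejected.
ALLOWED_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def eval_arithmetic(expression):
    """Evaluate +, -, *, / over numeric literals; raise ValueError for anything else."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in ALLOWED_OPS:
            return ALLOWED_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"Disallowed expression element: {type(node).__name__}")
    return walk(ast.parse(expression, mode="eval"))

print(eval_arithmetic("30 * 10"))
```

Function calls, attribute access, and names all fall through to the `ValueError`, so an input like `__import__('os')` is rejected before anything runs.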

Conclusion

This article has been a tutorial to recap all the major prompting techniques for AI Agents. There’s no single “best” prompting technique as it depends heavily on the task and the complexity of the reasoning needed.

For example, simple tasks, like summarization and translation, are easily performed with Zero-Shot/Regular prompting, while CoT works well for math and logic tasks. On the other hand, Agents with Tools are typically created with ReAct mode. Moreover, Reflexion is most appropriate when learning from mistakes or iterations improves results, as in gaming.

In terms of versatility for complex tasks, PoT is the real winner because it is solely based on code generation and execution. In fact, PoT Agents are getting closer to replacing humans in several office tasks.

I believe that, in the near future, prompting won’t just be about “what you say to the model”, but about orchestrating an interactive loop between human intent, machine reasoning, and external action.

Full code for this article: GitHub

I hope you enjoyed it! Feel free to contact me for questions and feedback or just to share your interesting projects.


(All images are by the author unless otherwise noted)